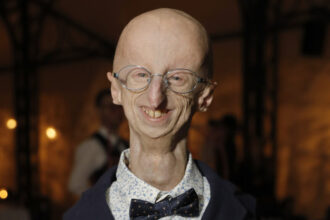

Doctor examining patient with fingertip pulse oximeter

Campaigners have called for “immediate action” on racial bias in medical devices like pulse oximeters following the publication of a U.K. report into health inequality.

Biased technology can lead to missed diagnoses and delayed treatment for ethnic minority groups and women, experts warn in the investigation led by Dame Margaret Whitehead.

Pulse oximeters

The British government commissioned an independent review into bias and tech after experts raised concerns that tools used to measure oxygen levels might not work well in people with darker skin tones.

Ethnic minority groups in the U.K. were hit particularly hard by Covid-19, and organisations like the NHS Race and Health Observatory asked at the time if the devices might be a factor.

The tools, called pulse oximeters, work by sending light through a person’s finger. They estimate how much oxygen is in that person’s blood based on how much light passes through.

Monday’s review found “extensive evidence of poorer performance” of the devices in people with darker skin tones. They tend to overestimate oxygen levels, which can lead to treatment delays.

To make matters worse, pulse oximeters and other optical devices are often tested on people with light skin tones, whose results are then “taken as the norm.”

Recalibrating the devices or adjusting guidance around them could help produce more reliable results for what is still a “valuable clinical tool”, the report authors wrote.

Usage guidance should be updated immediately to reduce racial bias in current clinical practice, they added.

Artificial intelligence

AI has huge potential within healthcare and is already used for some clinical applications.

But it’s well recognised that AI tools can produce biased results on the basis of the data they’re fed.

In medicine, that could mean underdiagnosing skin cancer in people with darker skin, if an AI model is trained on lighter-skinned patients. Or it might mean failing to recognise heart disease in X-rays of women, if a model is trained on images of men.

With relatively little data yet available to measure the impact of such potential biases, the authors call for a taskforce to be set up to assess how large language models (like ChatGPT) could affect health equity.

‘Immediate action’ needed

Jabeer Butt, chief executive of the Race Equality Foundation, told me the review’s findings “demand immediate action.”

“We must swiftly implement change, including conducting equity assessments and enforcing stricter regulations on medical devices,” he said. “Equal access to quality healthcare should be a right for all, regardless of race or skin colour. We need to get rid of biases and ensure our medical tools perform effectively for everyone.”

Although he welcomed the report, he said it was “crystal clear” that “we need greater diversity in health research, enhanced equity considerations, and collaborative approaches.”

Otherwise, racial biases in medical devices, clinical assessments and healthcare interventions “will persist,” he added. “Rather than improve patient outcomes, they could lead to harm.”

Butt’s concerns echo those of NHS Race and Health Observatory chief executive Professor Habib Naqvi.

“It’s clear that the lack of diverse representation in health research, the absence of robust equity considerations, and the scarcity of co-production approaches, have led to racial bias in medical devices, clinical assessments and in other healthcare interventions,” Naqvi said in a statement.

He added that the research sector needed to tackle the under-representation of ethnic minority patients in research. “We need better quality of ethnicity data recording in the [public health system] and for a broad range of diverse skin tones to be used in medical imaging databanks and in clinical trials,” he said.

Medical devices should “factor equity from concept to manufacture, delivery and take-up,” he added.